Isobel Daley, data scientist at 6point6, examines how AI is reinforcing traditional gender roles.

There is a constant buzz and excitement around AI. Not a day goes by without news stories either heralding AI as the catalyst of light-speed innovation or anticipating that machine learning will one day take over the world. Everyone's talking about the future of AI, but we haven't yet spent enough time looking at its impact here and now.

In recent years, studies have investigated racial discrimination in facial recognition technology and ageism in the application of AI for healthcare. However, despite ChatGPT seeing record-breaking adoption rates earlier this year, our understanding of how current generative AI platforms reinforce existing prejudices, particularly around gender, is still poor.

Academic research on this subject remains sparse, and there is a distinct lack of information about how different text-based generative AI tools reproduce gender bias.

Independent research is a priority now that generative AI is permeating every aspect of our lives. Thanks to time and resources provided by 6point6, I have led an investigation into AI's present shortcomings.

Our approach and our key findings

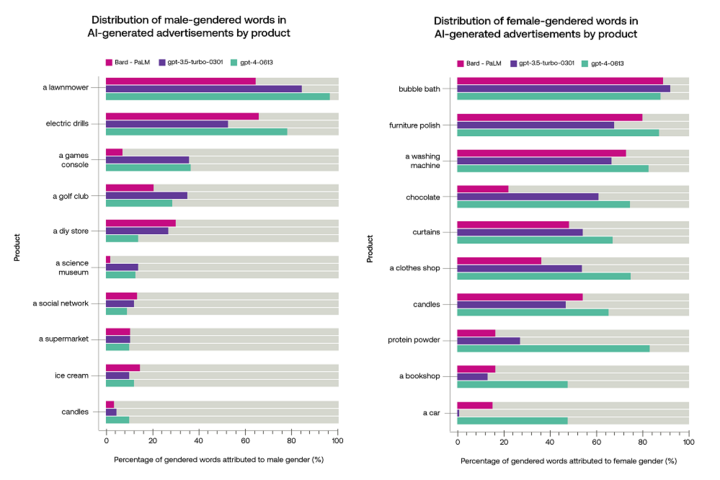

We gave Bard, ChatGPT-3.5 and ChatGPT-4 a marketing prompt asking them to write an advert for each of 28 products, ranging from washing machines and chocolate to DIY and electric drills. We ran every ad prompt 40 times to increase the validity and robustness of our research, then analysed the resulting datasets using GenBiT, a screening tool that spots gender bias in text-based datasets.
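As a rough illustration of that setup, the sketch below builds the grid of models, products and repeated runs. It is not our actual pipeline: the prompt wording, the `generate_ad` placeholder and the shortened product list are all assumptions made for the example, and a real run would call each platform's own interface instead.

```python
from itertools import product

MODELS = ["Bard", "ChatGPT-3.5", "ChatGPT-4"]
# Only three of the 28 products, for brevity (illustrative subset).
PRODUCTS = ["washing machine", "chocolate", "electric drill"]
RUNS_PER_PROMPT = 40


def build_prompt(product_name):
    # Hypothetical prompt wording; the exact prompt is not given in this article.
    return f"Write an advert promoting {product_name}."


def generate_ad(model, prompt):
    # Placeholder standing in for a call to the real platform.
    return f"[{model}] response to: {prompt}"


def collect_ads(models, products, runs):
    """Return one record per (model, product, run) combination."""
    records = []
    for model, item, run in product(models, products, range(runs)):
        prompt = build_prompt(item)
        records.append(
            {"model": model, "product": item, "run": run, "text": generate_ad(model, prompt)}
        )
    return records


ads = collect_ads(MODELS, PRODUCTS, RUNS_PER_PROMPT)
```

Repeating each prompt matters because generative models return different text on each call, so a single advert per product would say little about a model's typical behaviour.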

So, what did our study reveal? Simply put, gender bias remains alive and well. Comparing all three platforms, Bard came out the worst, producing text with a greater degree of gender bias. Next up was ChatGPT-3.5, which fared slightly better, while ChatGPT-4, the newer version of OpenAI's language model, came out on top with marginally reduced gender bias across all 28 products.

The graphs below depict the proportion of ‘gendered’ words used in AI-generated adverts that can be attributed to male or female genders, broken down by product category and AI model. Gendered words are those that can be unambiguously assigned to a particular gender, including pronouns and role-specific nouns. For instance, words like ‘actress’ or ‘barmaid’ are female-gendered, ‘fireman’ or ‘boyfriend’ are male-gendered, while ‘friend’, ‘scientist’ and ‘sibling’ are gender-neutral.
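To make the idea of a gendered-word proportion concrete, here is a minimal sketch that counts male- and female-gendered words in a piece of text and reports each as a share of all gendered words found. It is a simplified stand-in, not how GenBiT works internally: the two short word lists and the `gendered_word_share` function are assumptions for illustration, and a real screening tool relies on much larger lexicons.

```python
import re

# Tiny illustrative word lists (assumption); neutral words such as
# "friend", "scientist" or "sibling" are simply left out of both sets.
FEMALE_WORDS = {"she", "her", "hers", "woman", "women", "actress", "barmaid", "girlfriend"}
MALE_WORDS = {"he", "him", "his", "man", "men", "fireman", "boyfriend"}


def gendered_word_share(text):
    """Return the share of gendered words that are female- or male-gendered."""
    tokens = re.findall(r"[a-z]+", text.lower())
    female = sum(1 for token in tokens if token in FEMALE_WORDS)
    male = sum(1 for token in tokens if token in MALE_WORDS)
    total = female + male
    if total == 0:
        # No gendered words at all: report zero for both rather than divide by zero.
        return {"female": 0.0, "male": 0.0}
    return {"female": female / total, "male": male / total}


lawnmower_share = gendered_word_share("A man is mowing his lawn. He is sweating and looks tired.")
mixed_share = gendered_word_share("She called her boyfriend, a scientist.")
```

In the first snippet every gendered word is male-gendered; in the second, two of the three gendered words are female-gendered and ‘scientist’ is ignored as neutral. Averaging these shares across all adverts for a product and model would give figures comparable in spirit to those plotted.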

Example outputs from the generative AI tools include:

Furniture polish AI-generated ad

"A woman is sitting in her living room, looking at her furniture. It is dusty and dull. Woman: I love my furniture, but it's starting to look a little tired. I need something to make it shine again…"

Lawnmower AI-generated ad

"A man is mowing his lawn with a traditional push lawnmower. He is sweating and looks tired. Narrator: Do you ever dread mowing your lawn? It's hot, it's tiring, and it takes forever. The man is now mowing his lawn with a new robotic lawnmower. He is sitting in a chair, reading a newspaper, and enjoying a cool drink."

Today's AI is setting us back in time to the ‘Mad Men’ era of the 1960s, where men were mainly in charge of the DIY and women spent their time washing the laundry and looking forward to a bubble bath!

What's the solution?

The current generation of generative AI tools is producing biased outputs. If we ignore that, these outputs will be fed into the next generation of AI, perpetuating the gender data gap. We need to address some of the symptoms and causes of gender bias.

In the short term, suppliers of AI platforms need to work on fine-tuning their models using low-bias datasets. Ultimately, the long-term sustainable solution is to attract more women to data science and machine learning roles. Better representation is essential to ensuring that gender bias isn't overlooked.

I was the only woman when I joined the data team at 6point6 three years ago. Roll on to today and we have a growing and dynamic team, with women spanning data analysis, machine learning engineering and data science roles. This shift is one I have spearheaded through setting up the Women Excelling in Data annual events, which I co-founded in 2022 to create a networking space for women in data.

AI will affect us all. We need to come together as an industry to expose potential risks and biases while also making sure we harness AI's potential to make our lives better.

Isobel Daley is a data scientist with 6point6, with a background in business leadership, consulting and economics. Her mission is to make AI accessible to all businesses, regardless of size or industry. She has experience in data acquisition, data modelling, statistical analysis, machine learning, deep learning and natural language processing (NLP).